Google took the wraps off Project Soli during its Google I/O developer conference, quietly introducing a highly sensitive motion sensor that could reshape how we interact with close-range devices.

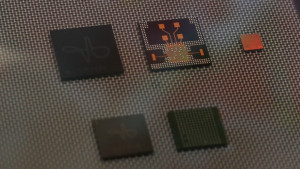

We’ve seen radar signals used in various ways, and generally in much larger sizes and forms than what Google unveiled at I/O. Project Soli, Google says, marks the first time radar technology has been used for gesture recognition. Soli comprises a miniature radar chip that measures just 11 millimeters square and runs at a fast 60GHz.

“We’re interested in new ways of interacting with technology,” explains Google’s ATAP Design Lead Carsten Schwesig. Part of this drive stems from the need to navigate smaller and smaller touchscreens, such as on a watch or activity tracker. Soli’s approach takes gestures we do on touchscreens, and separates them from the screen itself. The idea, says Schwesig, “is that you do these motions with your hand all day long, and they’re very comfortable. What if the devices disappear into the environment or just shrink, or you’re wearing them? Even if there’s no device, those motions are still useful to control things.

“With your hand, you can mimic these basic controls like sliders, or dials, or 2D surfaces. And if we can detect those,” Schwesig says, “it has interesting potential for interacting with Internet of Things type of devices, or wearable devices. That’s really our overall direction.”

Google is still hashing out the software side of the equation (“a huge, hardcore techy [digital] signal processing effort,” Schwesig says) and how to define the vocabulary of gestures and interaction. In the demos at I/O, you could wave your fingers over the chip and watch the range-Doppler image on the screens ahead of you as it tracked your fingers’ motion, measured by distance, velocity, and energy. Soli can extract information from those signals, learn to recognize the different in-air motions, and apply them to specific actions, such as controlling a watch screen.
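To give a sense of the velocity side of that measurement, here is a minimal sketch of the textbook Doppler relation for a radar that transmits and receives from the same spot. It is not Google's signal processing, which the company has not detailed; the function names and the 0.1 m/s finger speed are assumptions made for illustration, and only the 60GHz carrier comes from the article.

```python
# Textbook two-way Doppler relation; an illustrative sketch, not Soli's actual pipeline.
SPEED_OF_LIGHT_M_S = 299_792_458.0
CARRIER_HZ = 60e9  # 60GHz, the operating frequency quoted for the Soli chip


def wavelength_m(carrier_hz: float = CARRIER_HZ) -> float:
    """Free-space wavelength of the carrier, in meters."""
    return SPEED_OF_LIGHT_M_S / carrier_hz


def doppler_shift_hz(radial_velocity_m_s: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Frequency shift of the echo from a target moving toward (+) or away from (-) the sensor."""
    # The factor of 2 accounts for the round trip: out to the target and back.
    return 2.0 * radial_velocity_m_s / wavelength_m(carrier_hz)


def radial_velocity_m_s(shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Invert the relation: recover radial velocity from a measured shift."""
    return shift_hz * wavelength_m(carrier_hz) / 2.0


if __name__ == "__main__":
    finger_speed = 0.1  # m/s, a hypothetical slow finger motion
    print(f"wavelength: {wavelength_m() * 1000:.2f} mm")
    print(f"Doppler shift at {finger_speed} m/s: {doppler_shift_hz(finger_speed):.1f} Hz")
```

At 60GHz the wavelength is roughly 5 millimeters, so a finger drifting toward the chip at 0.1 m/s shifts the echo by about 40Hz. The short wavelength is part of why small, slow motions produce a shift large enough to measure.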

Soli’s range is currently up to 5 meters, although Schwesig said that the range can be adjusted, depending upon the intended application and how much energy is put into the sensor. For now, Google’s focus is on a range of zero to two feet, best for close-range hand interactions.

The micro-motions and fast motions of the “small” gestures that will typify Project Soli’s new gesture language may not be for everyone, though. Even though Schwesig notes the motions are not unlike what we do every day, there is the potential for those with motor or mobility problems to be left out of this brave new interface revolution. That said, if the sensor’s interaction language takes into account the types of motions needed by those with disabilities, it could end up being a boon to that community. “That’s one of the great potentials of this technology,” agrees Schwesig.

Whether Soli will realize its potential will depend a lot on how it gets implemented. And for now, it remains a work in progress, with no time frame attached to a developer release. “We’re really focused now on just making the technology work. Once we are ready to release this to developers and to users, we’ll start exploring those areas where existing technology doesn’t work very well for people.”